By Siobhan King | November 1, 2024

AI hallucinations

So, you’re thinking about embarking on an AI project, but how do you know if the outcome will be worth the effort? Especially since open AI tools on the web have become prone to problems, particularly AI hallucinations, which are simply not acceptable within any enterprise AI solution you may want to adopt.

Our Senior Consultant, Siobhan King, introduces us to ontologies, grounding and RAG, and explains how these techniques can leverage your data and support AI success.

What are AI hallucinations?

AI hallucinations are when a Large Language Model (LLM) generates false or misleading information, often in response to prompts put into Gen AI tools such as Microsoft Copilot and ChatGPT. This hallucination usually happens because the LLM perceives patterns or relationships between things which don’t exist.

AI hallucinations have been the big problem to solve over the past few years, and much development has gone into mitigating the issue. Unsurprisingly, the answer seems to be to give LLMs greater context so that they produce better outputs, which is what those in the information management profession have known for years.

What are LLMs and how do they work?

To understand AI hallucinations and how they happen, a basic understanding of what LLMs actually do is helpful. LLMs parse very large quantities of text (think tens of billions of items) in order to identify semantic and syntactic patterns and predict outputs based on probability. This is mathematically expressed as:

$$P(t_n \mid t_{n-1}, t_{n-2}, \ldots, t_1)$$

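In this expression, each t is a token (a word or part of a word), and the formula gives the probability of the next token given all the tokens that came before it. A much simpler illustration is using probability to finish a sentence. For example, you might ask an LLM to finish the following: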

“All we need is …”

The LLM may predict the probability for each of the following words being used to finish the sentence:

- Love (60%)

- Each other (20%)

- Hope (10%)

- Time (10%)
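
The sentence-completion illustration above can be sketched in a few lines of Python. This is only a toy: the candidate words and their raw scores are made up for the example, and a real LLM scores tens of thousands of possible tokens using a trained neural network. The `softmax` step shown here is a standard way of turning raw scores into probabilities that add up to 100%.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    largest = max(scores.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - largest) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}

# Hypothetical raw scores for continuing "All we need is ..."
scores = {"love": 2.0, "each other": 0.9, "hope": 0.2, "time": 0.2}

probabilities = softmax(scores)
best = max(probabilities, key=probabilities.get)

# Print the candidates from most to least likely
for word, p in sorted(probabilities.items(), key=lambda kv: -kv[1]):
    print(f"{word}: {p:.0%}")
print("Most likely completion:", best)
```

Note that the model picks what is most probable, not what is true, which is exactly why a confident-sounding answer can still be wrong.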

When an LLM is responding to ChatGPT and Copilot prompts, it is doing something like this but on a much larger and more sophisticated scale. These common tools are basing probability on vast amounts of data which is ingested during an initial training phase. Because of this scale, they are successful at completing generalist tasks but when it comes to more specific and detailed queries, they do not always perform as well. One of the problems present in these tools is AI hallucinations.

Reasons for AI hallucinations range from poor data quality and poorly crafted prompts to training data that is very out of date. There are also reasons that are harder to pin down, because the statistically most probable answer, based on the most common patterns, is not always the correct one.

Grounding and Retrieval Augmented Generation

To mitigate this issue, AI developers have introduced “grounding.” There are several other options such as fine-tuning, multi-prompts, etc., but grounding overlaps with what most organisations wanting to use AI need to do anyway.

Grounding means that you are using your domain data to inform the outputs from your LLM. The most common way of doing this is Retrieval Augmented Generation (RAG), so called because you are augmenting the LLM by making your own data sources available for retrieval.

Reducing the number of AI hallucinations is not the only reason you might want to do this. Most organisations thinking of using AI will have a desire to leverage all the data they currently hold.

How RAG works

RAG most commonly uses vectors to index your enterprise data before the relevant parts are handed over to the LLM. RAG sources within your organisation can be held in databases, websites, or sets of documents. For the purpose of RAG, this data is broken into chunks (yes, this is the technical term) and each chunk is converted into a vector: a list of numbers, often called an embedding, that represents the meaning of the chunk.

The more closely related the concepts within the chunks of data, the more similar their vectors are going to be. Real vectors contain hundreds or thousands of numbers, but to keep things simple, imagine each one as a single number. For example, the concept of “tree” may have a vector of 1118 and “leaf” may have a vector of 1119.

Any query that is put to the LLM is also given a vector, so that the question may be matched with the closest chunks of information. So a question about “woods” may be given a vector of 1117, which is pretty close to our 1118 and 1119 vectors.

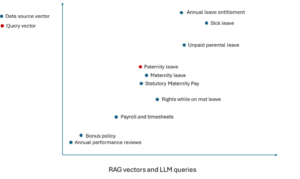

To illustrate, this diagram roughly shows how closely vectors would relate on different HR policy topics (in blue). Maternity leave has vectors that are very close to Statutory Maternity Pay and are somewhat close to Rights while on maternity leave and Unpaid parental leave as topics. Other topics, such as sick leave and performance review, are seen as more distantly related. The query that has been put to the LLM is regarding paternity leave (in red) and this is shown as most closely related to the maternity leave policy. The chunks with the most closely related vectors are then passed to the LLM, which uses them to answer the user.
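
As a rough sketch of the matching step described above, the Python below ranks a handful of HR topics by how close their vectors are to a query vector, then assembles a prompt from the best matches. Everything here is an assumption made for illustration: the three-number vectors are hand-made (a real system would get them from an embedding model), cosine similarity is one common closeness measure among several, and `top_matches` and `build_prompt` are hypothetical helper names. A real prompt would also include the text of each chunk rather than just its title.

```python
import math

def cosine_similarity(a, b):
    """Closeness of two vectors: near 1.0 means very similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hand-made vectors for illustration only (not output from a real model)
chunks = {
    "Maternity leave": [0.9, 0.8, 0.1],
    "Statutory Maternity Pay": [0.85, 0.75, 0.3],
    "Unpaid parental leave": [0.6, 0.3, 0.4],
    "Sick leave": [0.1, 0.7, 0.3],
    "Performance review": [0.05, 0.1, 0.9],
}

# Hypothetical vector for a question about paternity leave
query_vector = [0.88, 0.8, 0.12]

def top_matches(query, store, k=2):
    """Return the k chunk names whose vectors are closest to the query."""
    ranked = sorted(
        store,
        key=lambda name: cosine_similarity(query, store[name]),
        reverse=True,
    )
    return ranked[:k]

def build_prompt(question, retrieved):
    """Assemble a grounded prompt from the retrieved chunk names."""
    context = "\n".join(f"- {name}" for name in retrieved)
    return (
        "Answer using only these policy sections:\n"
        f"{context}\n\nQuestion: {question}"
    )

matches = top_matches(query_vector, chunks)
print(build_prompt("How much paternity leave can I take?", matches))
```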

For RAG to work well, it is strongly advisable to have well-defined hierarchies in place to help organise and “chunk” your information. Good hierarchies help RAGs and LLMs make sense of your information and more accurately predict probability and deliver better results. It’s not just about the quality of your data but also the quality of your structure.
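
To show why hierarchy helps with chunking, here is a minimal sketch that splits a document at its headings and labels each chunk with its full heading trail. It assumes the source uses `#`-style headings, and the sample policy text and the `chunk_by_heading` helper are invented for the example. Real chunking tools offer many more options, such as size limits and overlap between chunks.

```python
def chunk_by_heading(text):
    """Split text into one chunk per heading, keeping the heading trail."""
    chunks = []
    path = []  # current heading trail, e.g. ["Leave", "Maternity leave"]
    body = []  # lines collected under the current heading

    def flush():
        joined = " ".join(body).strip()
        if joined:
            chunks.append({"path": " > ".join(path), "text": joined})
        body.clear()

    for line in text.splitlines():
        stripped = line.strip()
        if stripped.startswith("#"):
            flush()  # close the previous chunk before moving on
            level = len(stripped) - len(stripped.lstrip("#"))
            title = stripped.lstrip("#").strip()
            del path[level - 1:]  # drop headings at this level or deeper
            path.append(title)
        elif stripped:
            body.append(stripped)
    flush()
    return chunks

# Invented sample document, for illustration only
sample = """
# Leave
## Maternity leave
Text of the maternity leave policy.
## Sick leave
Text of the sick leave policy.
# Performance
Text of the performance review policy.
"""

for chunk in chunk_by_heading(sample):
    print(chunk["path"], "|", chunk["text"])
```

Because each chunk carries its place in the hierarchy, a retrieved passage arrives with the context that it belongs to, say, the leave policies rather than performance management.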

Using RAG is better than relying on pure probability but it’s still an approximation rather than a real understanding of context. It only goes some of the way to solving problems like AI hallucinations. To really provide context to improve results you need something called an ontology.

What is an ontology?

According to Futurist Ross Dawson of Informivity, ontology is a strong candidate for word of the year for 2024.

Increasingly, we are seeing those working in the AI space talking about the importance of ontologies, and the power of integrating these into AI tools. But what is ontology and how can it lead to better LLM outputs?

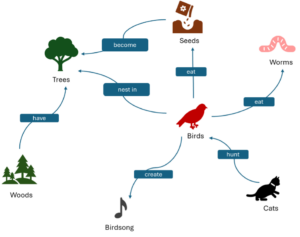

An ontology is a way of classifying and defining the relationships between different concepts. Ontologies often present graphical representations of concepts, categories and relationships. They provide a framework for understanding your domain; they are tools to provide context and make sense of your world.

Ever been shopping online, say for hiking boots, and received a pop up saying “You might also be interested in hiking socks”? Ontology is powering an interaction like this, as most commercial websites use ontology to relate products to each other and make them easier for customers to find.

Below is a very simple ontology which demonstrates how relationships are graphically defined and represented. (Most ontologies are much larger, more sophisticated and more precise than this.)

Ontologies are models which operate at a conceptual level and are intended to inform the development of models to be used within systems.

Ontologies can be translated into knowledge graphs for particular systems, which define real-world objects and concepts within your organisation. Ideally, all knowledge graphs within your organisation will refer back to the overarching ontology framework, which provides consistency, efficiency and flexibility to manage how information is defined and structured.

Benefit of using ontologies with LLMs and RAG

Using ontologies with a RAG has clear advantages as it provides greater context to the LLM which in turn leads to better quality outputs. When using vectors alone, relationships are inferred as a result of mathematical proximity. With an ontology, the relationship between concepts is explicit. For example, a bird may frequently be close to a tree, but a bird is not a tree. This is a gross oversimplification to make a point, but vectors fail to define relationships where ontologies succeed.
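
The bird and tree example can be made concrete with a tiny sketch. One common way to record explicit relationships is as subject, relation, object triples. The relation names below (`is_a`, `nests_in`, `part_of`) and both helper functions are assumptions chosen for the example, not a standard vocabulary, and a production ontology would normally be built with dedicated tooling rather than a Python list.

```python
# Hypothetical mini-ontology as (subject, relation, object) triples
triples = [
    ("bird", "is_a", "animal"),
    ("tree", "is_a", "plant"),
    ("bird", "nests_in", "tree"),
    ("leaf", "part_of", "tree"),
]

def relations_between(subject, obj, facts):
    """List the named relationships that link subject to obj."""
    return [rel for s, rel, o in facts if s == subject and o == obj]

def is_a(subject, category, facts):
    """Follow is_a links upwards to check whether subject is a kind of category."""
    seen = set()
    frontier = [subject]
    while frontier:
        current = frontier.pop()
        if current == category:
            return True
        if current in seen:
            continue
        seen.add(current)
        frontier.extend(
            o for s, rel, o in facts if s == current and rel == "is_a"
        )
    return False

print(relations_between("bird", "tree", triples))  # how a bird relates to a tree
print(is_a("bird", "tree", triples))               # a bird is not a kind of tree
print(is_a("bird", "animal", triples))             # a bird is a kind of animal
```

Vector proximity could only say that "bird" and "tree" often appear together. The triples say how they are related, and just as importantly, how they are not.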

This means that the context that ontologies provide LLMs is much more meaningful – leading to a much more powerful tool.

Structure matters

The structure of your data has a clear impact on how well any AI tools you want to adopt or develop will perform. As a minimum, you will need to have a good, clear hierarchical structure for the information you plan to use as a source for RAG. But really, before putting the time and energy into rolling out an AI tool, you need to consider how your data is structured, and particularly how an ontology will support your RAG to make the exercise worthwhile.

Ontologies are something Metataxis has been developing for clients for many years and we have a wealth of experience in this area.